The Epistemic Firewall (Part One)

The first section of a primer on the personal and collective maintenance of Cognitive Security in the age of mass distributed digital noise

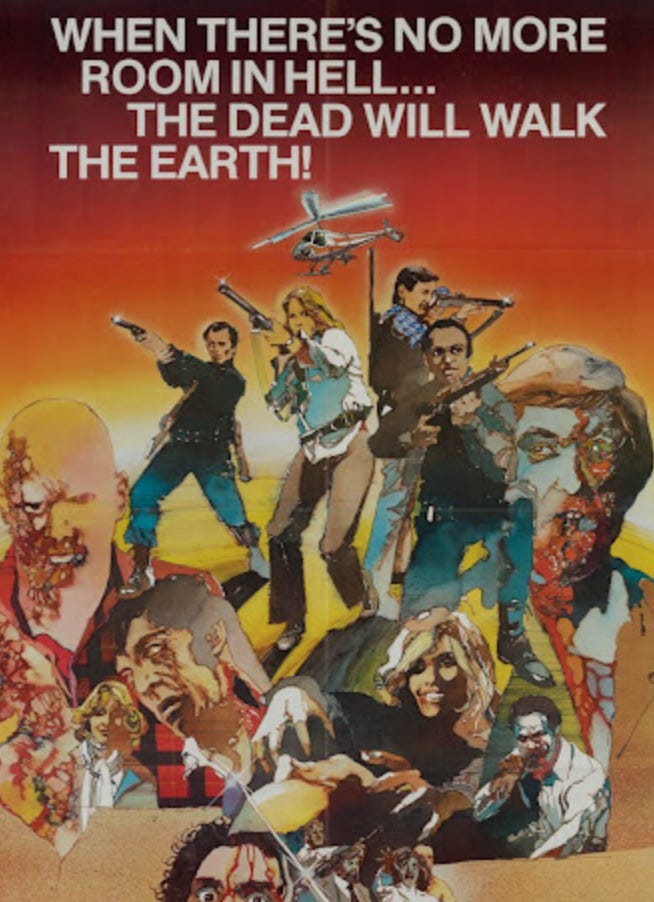

Picture a sprawling, abandoned mall like in Dawn of the Dead, or a post-apocalyptic grocery store like in 28 Days Later. You’re a survivor, you were smart enough to make it this far and you’re hungry.

Lucky for you, every stall is piled high with glittering objects, but this time it’s not lighter fluid, hunting rifles, nor irradiated apples.

Instead, the shelves contain AI chatbots, gene-editing kits, open-source models, cheap rockets, kits to rewire whole industries.

2025 is more than halfway over and the doors to the future are similarly wide open.

The bounty is real. The opportunity is intoxicating.

But let’s not forget, something else prowls the corridors:

Zombies.

Not reanimated undead corpses… but tireless AI agents, sloppy automation, and dulled human minds moving like ghosts through an echoing arcade.

This is the world you live in now: abundance and peril lurk together until the fighter jets sight us from overhead.

If you want to come out of this mall not empty-handed, but still human, you must build an Epistemic Firewall: a daily, practical architecture that protects the sovereignty of your mind.

Let this be your survival kit.

In this expanded primer, the Spacers Guild will teach you everything you need to know to begin flourishing in this new world, dangerous yet ripe with possibility.

Let’s begin.

The New Battlefield

For most of human history information was scarce. Your brain learned to treat every new signal as important, an advantage on the savanna.

Today that wiring is a liability.

We live in an age of information deluge: infinite content vying for your limited attention.

Platforms, built to harvest clicks, exploit the brain’s appetite for novelty with infinite scroll, intermittent rewards, and outrage-as-chemistry.

On top of that, powerful AI systems have become seductive mirrors.

Large Language Models (ChatGPT and its successors, and the new generation of open weight models like DeepSeek and Llama) speak with the fluency of an oracle. They mix real insight with confident hallucination.

When a machine looks, talks, and reasons like a person, remembering that it is only a reflection of aggregated human text becomes dangerously easy to forget. The result is that misinformation and delusions proliferate and manufactured consensus is presented as effective propaganda to the masses.

Add to this the “bot problem”: armies of dumb, relentless agents (autonomous email writers, scraping bots, hiring assistants) and humans who outsource thinking to them until curiosity atrophies.

The mall glitters, but it’s laced with traps, and the horde is still out there.

Abundance is real and so is risk.

The question becomes not whether the Technological Singularity or the New Space Race will change everything… they will… but how we will steer that change.

The answer starts with your mind.

What an Epistemic Firewall does

An epistemic firewall is a living set of habits, institutional practices, and technical patterns that together:

keep you from mistaking confident-sounding output for truth;

slow your reactions so your judgments don’t run on autopilot;

bias your attention toward primary sources, depth, and accountability; and

strengthen your social networks so they’re resilient to factionalization.

In short: you make your own mind the deliberate pilot of your life. It is the key to total personal self-actualization, the only way, in fact.

This is the work you must do.

The toolset you must master

1. The Attention Engine

To date, our social media platforms are optimized for engagement, not truth. Notifications and emotion-rich hooks are engineered to trip your primitive reward system.

The result is shallow, constant distraction.

2. The AI Mirror

LLMs mirror collective language. They are superb at style and plausible-sounding reasoning, and terrible at being accurate oracles. They hallucinate; they omit provenance; they amplify bias.

3. The Agent Swarm

Autonomous bots and agents scale relentlessly and cheaply. Used well they’re tools. Used badly they swamp workflows, amplify scams, and create noisy apparitions of consensus.

To Build the Firewall: Practical Daily Protocols

Below are practical, repeatable habits—your defensive architecture. Start with these and make them rituals.

1) Verify, then trust

Treat every claim, especially AI output, as a working hypothesis.

Ask: how can I independently verify this?

Use primary sources (papers, datasets, raw transcripts), not summaries of summaries.

Starter actions

If an AI or thread makes a factual claim, pause and search for two independent primary sources before accepting it.

Keep a bookmarks folder for “go-to primary sources” (arXiv, PubMed, official data portals).

2) Interrogate the signal

Always ask: who benefits if this is believed? What’s omitted? What incentive shapes this message?

A checklist

Source name and track record?

Incentives or funding disclosed?

Evidence provided, and is it primary?

Counter-arguments and context present?

3) Curate your information diet

Stop grazing. Schedule focused sessions for news, and reserve deep reading for long-form materials. Swap short, reactive consumption for long, reflective work.

Tactics

Use site blockers or timed windows (e.g., two 30-minute social sessions per day).

Read full papers or primary documents once a week.

Subscribe to curated research digests rather than feeds.

4) Practice digital silence and deep processing

Your brain needs blank, device-free time to consolidate learning and notice patterns.

Tactics

Daily 60–90 minutes of uninterrupted, device-free time: reading, walking, journaling, exercise, meditation.

Weekly “Cognitive Sabbath”: 24–48 hours with no algorithmic feeds before major decisions.

5) Calibrate emotions; delay response

Emotional surges are the attack vector. Let adrenaline subside before replying.

Rules

The 24-hour rule: for non-urgent provocation, do not respond for at least 24 hours.

If you must post, draft, sleep on it, then review.

6) Keep humans in the loop for judgment

Use AI for grunt work—summaries, data cleaning—but require human sign-off on interpretation, policy, hiring, and moral judgments.

Team design

AI writes the draft; a human referee verifies sources, context, and values alignment before publication.

Image: Dawn of the Dead (1978) Movie Poster

Organizational Practices: How Groups Maintain Cognitive Security

Individuals can do a lot, but group structure is necessary to scale cognitive health.

A. Codify a “Digital Conduct” pledge

Short, enforceable rules for internal channels: require source links, prohibit anonymous claims without evidence, demand civil critique protocols.

B. Coherence Dashboard: measure what matters

Build simple metrics to assess memetic health.

Core KPIs

Signal Density: % of posts with primary-source citations.

Constructive Ratio: % of threads that end with a proposed action, synthesis, or clarified stance vs. those that devolve into personal attacks.

Consensus Latency: time from proposal to ratified plan on contentious issues.

Re-engagement Rate: % of participants who remain active 7 days after a contested thread.

Stewardship Quotient: contributors creating or maintaining shared assets vs. passive consumers.

Implement this with basic analytics on Discord/Slack + simple NLP topic classification. Use dashboards to inform mentors and council — not to shame.

C. Peer review and “sage referees”/moderators

Create structured critique sessions where members present evidence, not rhetoric. Rotate impartial moderators to mediate.

D. Mental-health liaisons

Train a few members to recognize signs of obsession or “ChatGPT psychosis” (e.g., isolation, attributing agency to AI, loss of real-world ties) and to trigger confidential peer review or referral.

Training Modules and Field Exercises

You don’t learn an Epistemic Firewall by reading once. Build training with drills and simulations:

Hallucination Drills: conversations with intentionally misleading bots; trainees must find and document hallucinations and propose verification steps.

Prompt-Injection Labs: show how poorly sanitized inputs cause model corruption; teach input sanitization and model interrogation.

Human-AI Team Exercises: pair humans with agents; humans must audit outputs, find failure modes, and defend decisions under pressure.

Run debriefs with mentors to convert mistakes into epistemic updates.

Human–AI Symbiosis

Don’t build a master-slave relationship with AIs. Here are some first-principles for finding a safer, more collaborative path:

Non-reduction: humans are not data farms; AI is not a soul.

Purpose alignment: AI objectives must be aligned to human flourishing and mission-critical values.

Recursive feedback: both parties must be structured to learn from the other’s critiques.

Epistemic humility: AI signals uncertainty; humans acknowledge limitations.

Psychological firewalls: guard against emotional transfer to machines.

Rights of withdrawal: either party must be able to disengage; dependency is a failure.

Turn this into an auditable “contract” for every major AI deployment.

Policy & Civic Moves (What Communities Should Push For)

Open models & audits: champion transparency—open weights, independent audits, reproducible evaluation.

Data portability and ownership: refuse a future where rentier platforms own human attention and identity.

Oversight boards: multi-disciplinary panels to review high-stakes deployments.

Education investment: scale AI literacy—spot hallucinations, demand provenance, resist tribal capture.

These are civic shields; they protect the commons so no single mall-owner controls the stalls.

The Digital Vanguard: Next Steps

for those who want to lead

If you want to be part of the force that “seizes the mall” and keeps it human:

Teach an AI workshop: whether casually to friends/family, a formal lecture, or a group activity (hallucination spotting, source verification)

Push for open-source tools in your circle; host code reviews and safety checks.

Create common-sense personal rituals: weekly “Mental Battery Check”, monthly “Cognitive Fast” before major decisions, and the 24-hour post-debate cooldown periods.

Measurement: How You’ll Know It’s Working

You’ll see three things change first:

conversations become slower and deeper (longer threads with citations and fewer flame-outs);

decisions become faster to implement once consensus forms (shorter Consensus Latency but with better outcomes);

people stay in community after hard debates (higher Re-engagement Rate).

Those are signs your firewall is an engine of clarity.

Why This is a Craft

We live in a sci-fi bazaar of wonder: open-source models, genetic engineering, reusable rockets, and advanced robotics. So, too, do we swim in an ocean of information, of communication, of artificiality and noise that cloaks the nature under the shroud.

Post-apocalyptic? Certainly! And the treasures out there are real.

The mall of the Technological Singularity is ours to claim. But we would do well to be as vigilant, inventive, and resourceful as possible.

You can be a scavenger in this mall, or you can be its steward, and build for a better tomorrow… Walking Dead-style, perhaps.

More on that next time.

The Epistemic Firewall is the set of habits and institutions that turns abundance into civilization instead of chaos.

Build it for yourself.

Build it for your teams, your friends, your family.

Build it into your clubs, schools, and other organizations.

Build Your Epistemic Firewall

Brendon Carpenter

Los Angeles August 2025